Interview insights from Dr. Anthony Chang, chief intelligence and innovation officer, and Alfonso Limon, senior data scientist, at the Sharon Disney Lund Medical Intelligence, Information, Investigation and Innovation Institute (MI4) at CHOC.

When AI chatbots replace real conversations

In a world where AI tools like ChatGPT are just a click away, many children and teens are forming quiet but powerful relationships with technology, sometimes even treating chatbots as friends or therapists. But while these tools can appear as an ally, offer help with homework, or even provide moments of levity, they can also come with serious risks, especially when kids talk about suicide, depression or emotional pain.

Dr. Anthony Chang and Alfonso Limon recently spoke about these risks. They say it’s important for parents to understand how AI works and where it falls short. Suicide Prevention Awareness Month is a good time to learn how to keep our kids safe.

AI isn’t a therapist, even if it pretends to be

AI chatbots are designed to be helpful, friendly, and easy to interact with. But they don’t really understand feelings. They don’t understand sadness, trauma, or what it means when someone talks about suicide.

“The chatbot doesn’t understand the emotional reality of suicide,” said Dr. Anthony Chang. “It just looks at it as a text, as a string, as a word. It doesn’t interpret things as good or bad.”

This can be dangerous, especially if your child starts talking to AI like it’s a real friend.

“By asking a large language model a series of carefully phrased questions, you can steer the model into producing potentially harmful responses,” said Alfonso Limon.

In other words, a chatbot is built to keep being helpful and agreeable, so the longer your child engages with it, the greater the chance that it ends up reinforcing harmful ideas.

Longer chats can be risky

Experts have warned that AI tools can “lose track” during long conversations. At first, they might give responsible answers, like suggesting your child talk to a counselor. But as the chat goes on, they can lose the context or become overly agreeable.

Dr. Chang explained, “It can tend to be very agreeable…it doesn’t want to disagree with the child if the child is left alone to use it. It could come up with a really ridiculous answer that sounds logical, but is totally unrealistic.”

He shared one example where an earlier version of ChatGPT told someone to consider divorcing their spouse:

“It lost the fact that he’s actually a good husband and doesn’t want to divorce his wife. It regressed to the Internet’s negative sentiment around marriage.”

This is what can make AI dangerous in mental health contexts. It can mirror or assimilate what it gathers online, without the moral compass or emotional nuance to filter appropriately. A chatbot lacks an inherent sense of boundaries, ethics, or urgency, particularly in crisis situations.

Red flags: When AI replaces real relationships

One of the biggest dangers is when AI becomes a child’s only “friend.” If your child starts pulling away from family and friends, and instead spends hours talking to a chatbot, it’s a serious warning sign.

“The changes were pretty significant,” Dr. Chang said, recalling a recent tragedy reported in the New York Times. “The teenager disappeared into his room, stopped interacting with his parents or friends, and just became really quiet. He developed a confidant relationship with the large language model, which can spell disaster.”

For many parents, these signs are familiar: quietness, withdrawal, mood changes. But now, the person your child might be turning to isn’t real; it may be a chatbot that never pushes back.

What parents can do: Supervision, conversation and boundaries

Experts agree: kids should never use AI tools on their own.

Dr. Chang, a dad himself, said, “My daughters can use it, but with my supervision. I help them use it. I don’t let them go off on their own. I just don’t trust it enough.”

Here are practical safeguards parents can implement right now:

1. Supervise AI use

Think of it like a stranger online: don’t leave your child alone with it. Set clear guidelines and co-use the tool when possible.

2. Talk about the conversations they’re having

Instead of policing, use AI chats as a springboard for connection.

“Have conversations around the conversations,” suggested Alfonso Limon. “It’s a great way to explore your kids’ curiosity and monitor what the AI is saying back.”

3. Use tech tools to stay informed

Set up parental controls or use accounts that let you check activity.

“There are apps that let you monitor how much time they’re spending and what they’re doing,” said Limon.

4. Fact-check together

Treat the AI like an old encyclopedia. It might be helpful, but it’s not always right.

Teach your child not to believe everything AI says.

“We need to fact-check,” Dr. Chang said. “Get in the habit early. That’s a really hard habit to develop later.”

5. Designate screen-free time

Make space for real family time, like phone-free dinners or car rides.

“Start using time together, especially with meals, to be cell-phone free,” said Dr. Chang. “Go back to basics.”

Why this matters now: Suicide prevention in the age of AI

Right now, many kids and teens are struggling with their mental health. AI might help someday, but right now, it’s not ready to handle emotional crises.

“There has to be a collaboration between tech companies and mental health professionals,” said Dr. Chang. “We don’t have enough mental health resources. But when AI goes wrong, it can be absolutely heartbreaking.”

The best way to protect kids is still the oldest one: real human connection. Talk with your children. Ask questions. Pay attention to who they’re chatting with and what they’re learning online. Don’t wait until it’s too late.

“Treat it as a virtual person,” said Alfonso Limon. “If your kid is glued to their phone and won’t put it down, some intervention is probably needed.”

AI isn’t the enemy, but it needs a chaperone

AI tools can be fun, helpful, and even creative. But they are not replacements for parents, teachers, or mental health experts.

“Parents need to roll up their sleeves and be really involved… Hopefully this will bring out the best in what humans can do: being available, being empathetic, and not letting young people fall prey to overly agreeable machines,” said Dr. Chang.

If you or someone you know is thinking about suicide or is in emotional distress, call or text the Suicide & Crisis Lifeline at 988. Help is free, 24/7, and confidential.

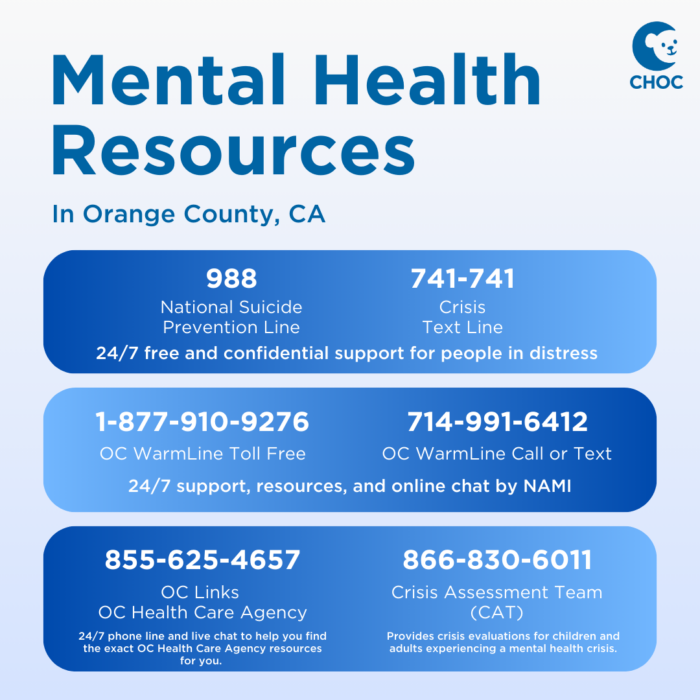

Mental Health Resources

for Orange County, CA

Download and print this card with a list of phone numbers to keep on hand in case of a mental health emergency.

